Meta is blocking access to the various personas of its AI chatbots as it looks to introduce new systems to make the use of AI chatbots safer and address concerns about teens receiving harmful advice from these tools.

This isn’t surprising given the concerns that have already been raised about AI bot interactions, but in reality, these apply to everyone, not just teenage users.

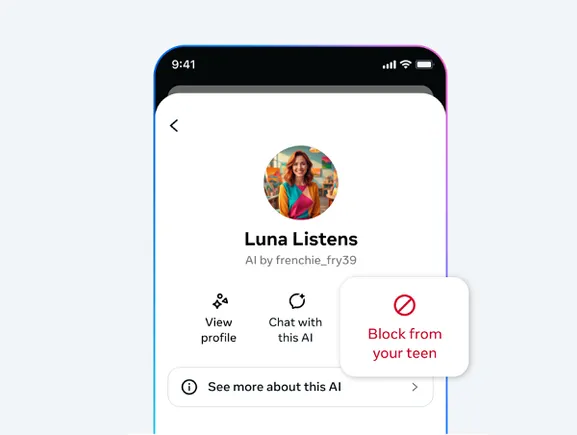

Back in October, Meta rolled out some new options for parents to control how their kids interact with AI profiles across its apps, amid concerns that some AI chatbots were providing potentially harmful advice and guidance to teens.

Indeed, some AI chatbots have been found to be giving dangerous guidance to teens about things like self-harm, eating disorders, and how to buy drugs (and hide them from their parents).

This prompted the FTC to begin investigating the potential risks of AI chatbot interactions. In response, Meta added control options that allow parents to limit how their kids interact with AI chatbots, in order to alleviate potential concerns.

To be clear, Meta is not alone in this. Snapchat also had to change its rules regarding the use of its “My AI” chatbot, and X recently had major issues with its Grok chatbot being used to generate offensive images.

Generative AI is still in its infancy, making it virtually impossible for developers to guard against all potential misuse. But still, this kind of use by teens seems fairly predictable, which reflects a broader push that prioritizes rapid progress over risk.

In any case, Meta is implementing new controls for AI bots to address this concern. In the meantime, however, the company has decided to temporarily cut off teens’ access to its AI chatbots entirely (except for Meta AI).

According to Meta:

“In October, we shared that we were building new tools to give parents more insight into how their teens are using AI, and give them more control over the AI characters their kids can interact with. Since then, we’ve started building new versions of our AI characters to give people an even better experience. We’re temporarily suspending access to existing AI characters by teens around the world while we focus on developing this new version.”

I mean, it’s not ideal. While it sounds like more concerns are being raised, it’s clear that the FTC investigation is weighing on the minds of Meta executives, with potential penalties looming for any misuse.

“In the coming weeks, teens will no longer have access to AI characters in the app until the updated experience is ready. This applies to anyone who has given us a teen birthday, and anyone who claims to be an adult but is suspected to be a teen based on our age prediction technology. This means that when we deliver on our promise to give parents more control over their teens’ AI experiences, these parental controls will apply to the latest version of AI characters.”

In other words, Meta is tacitly admitting that its AI chatbots have some serious flaws that need to be addressed. And while teens will still have access to the main Meta AI chatbot, they will not be able to interact with custom bot personas in the app.

Will it have a big impact?

Well, research published in July last year found that 72% of U.S. teens already use AI companions, and many now have regular social interactions with virtual friends of their choice.

Couple this with Meta’s efforts to introduce an army of AI chatbot personas into the app as a way to boost engagement, and the restriction could seriously hamper its broader plans.

But the biggest question is how you can secure this type of AI interaction, given that AI bots learn from the web and adapt their responses based on your queries.

There are many ways to trick an AI chatbot into providing a response that its developer doesn’t want it to provide, and because AI chatbots match language on such a large scale, it’s impossible to account for every variation.

And digitally native teens are very savvy, and they will look for weaknesses in these systems.

So while the move to restrict access makes good sense, I don’t see how Meta can develop effective safeguards against future concerns.

So perhaps Meta should simply avoid implementing AI chatbots with different personas that pretend to be real humans.

They’re not really needed in any case. Also, talking to a computer is not “social” in our more common understanding, so it definitely goes against the “social” aspect of social media anyway.

Social media is designed to foster human interaction, and any attempt to dilute that with AI bots seems at odds with this. I mean, I can see why Meta would want to do it. With more AI bots interacting like real humans in its apps, liking posts and leaving comments, real human users will feel special, which will encourage them to post more and spend more time in the app.

I can see why Meta would want to implement something like that, but I don’t think the risks are fully appreciated, especially when you consider the potential impact of forming relationships with non-human entities, and how that affects people’s perceptions and mental states.

Even Meta admits that this has become a problem for teens. But in practice, it can be problematic across the board, and I don’t think the value of these bots from a user’s perspective outweighs their potential risks.

At least until we know more. At least until there’s a large study that shows the impact of AI bot interactions, and whether they are good or bad for people.

So why stop at banning it for teens, and not ban it for everyone until we have more insight?

I know that won’t happen, but it seems like Meta is admitting that this is a bigger area of concern than it anticipated.